Hey Experts,

We are running:

- Jenkins core: 2.426.3
- Java: 11.0.16.1

Our current `JAVA_OPTS` is set as follows:

```shell
export JAVA_OPTS="-DJENKINS_HOME=$JENKINS_HOME \
  -XX:ReservedCodeCacheSize=512m -XX:+UseCodeCacheFlushing -Xms$MIN_HEAP_SIZE -Xmx$MAX_HEAP_SIZE \
  -XX:+UseG1GC -XX:G1ReservePercent=20 \
  -Xloggc:/usr/local/tomcat/logs/jenkins.gc-%t.log -XX:+UseGCLogFileRotation \
  -XX:NumberOfGCLogFiles=5 -XX:GCLogFileSize=2M \
  -XX:+IgnoreUnrecognizedVMOptions \
  -XX:-PrintGCDetails -XX:+PrintGCDateStamps -XX:-PrintTenuringDistribution \
  -Dhudson.ClassicPluginStrategy.useAntClassLoader=true \
  -Dkubernetes.websocket.ping.interval=20000 \
  -Dhudson.slaves.SlaveComputer.allowUnsupportedRemotingVersions=true \
  -Djava.awt.headless=true -Dhudson.slaves.ChannelPinger.pingIntervalSeconds=300"

export JAVA_OPTS="$JAVA_OPTS -Dhudson.model.DirectoryBrowserSupport.CSP=\"default-src 'none' netdna.bootstrapcdn.com; img-src 'self' 'unsafe-inline' data:; style-src 'self' 'unsafe-inline' https://www.google.com ajax.googleapis.com netdna.bootstrapcdn.com; script-src 'self' 'unsafe-inline' 'unsafe-eval' https://www.google.com ajax.googleapis.com netdna.bootstrapcdn.com cdnjs.cloudflare.com; child-src 'self';\" -Dcom.cloudbees.hudson.plugins.folder.computed.ThrottleComputationQueueTaskDispatcher.LIMIT=100"
```
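A side note on the GC logging flags above: as far as we understand JEP 271 (unified JVM logging, JDK 9), `-XX:+UseGCLogFileRotation`, `-XX:NumberOfGCLogFiles`, `-XX:GCLogFileSize`, `-XX:+PrintGCDateStamps` and `-XX:PrintTenuringDistribution` no longer exist on Java 11. Because `-XX:+IgnoreUnrecognizedVMOptions` is set, the JVM would silently drop them, so the GC log may be neither rotated nor timestamped, which makes it hard to correlate with the port 50000 events. A sketch of the `-Xlog` equivalent, assuming the same directory and rotation settings and meant to replace the legacy flags rather than sit next to them:

```shell
#!/usr/bin/env bash
# Sketch only: Java 9+ unified-logging replacement for the legacy GC flags
# (-Xloggc, -XX:+UseGCLogFileRotation, -XX:NumberOfGCLogFiles, -XX:GCLogFileSize,
# -XX:+PrintGCDateStamps). Use it INSTEAD of those flags, not alongside them.
#   gc*                      -> all GC-related log tags at info level
#   %t                       -> timestamp in the file name, as in the old -Xloggc path
#   time,uptime,level,tags   -> decorations printed on every log line
#   filecount=5,filesize=2M  -> keep 5 rotated files of 2 MB each
GC_LOG_OPTS="-Xlog:gc*:file=/usr/local/tomcat/logs/jenkins.gc-%t.log:time,uptime,level,tags:filecount=5,filesize=2M"
export JAVA_OPTS="$JAVA_OPTS $GC_LOG_OPTS"
```

With `time` decorations on every line, GC pauses can be matched against the timestamps of the agent connection failures.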

where `MIN_HEAP_SIZE=12288m` and `MAX_HEAP_SIZE=200G`.
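For scale: `-Xmx200G` allows the heap to grow to 204800 MiB, and adding the 512 MiB `ReservedCodeCacheSize` gives 205312 MiB before metaspace, thread stacks and GC bookkeeping are counted. A small sketch to put these numbers next to the host's `MemTotal`, assuming a Linux host with `/proc`; `jvm_size_to_mib` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a JVM size string (12288m, 200G, 512k, or plain
# bytes) into whole MiB, rounding down.
jvm_size_to_mib() {
  local value=$1
  local number=${value%[kKmMgGtT]}   # drop one trailing unit letter, if any
  local unit=${value:${#number}}     # the letter that was dropped (may be empty)
  if ! [[ $number =~ ^[0-9]+$ ]]; then
    echo "unsupported size: $value" >&2
    return 1
  fi
  # 10# forces base 10 so values with leading zeros are not read as octal.
  case "$unit" in
    k|K) echo $(( 10#$number / 1024 )) ;;
    m|M) echo $(( 10#$number )) ;;
    g|G) echo $(( 10#$number * 1024 )) ;;
    t|T) echo $(( 10#$number * 1024 * 1024 )) ;;
    *)   echo $(( 10#$number / 1024 / 1024 )) ;;  # no suffix: bytes
  esac
}

# Values taken from the JAVA_OPTS above.
MIN_HEAP_SIZE=12288m
MAX_HEAP_SIZE=200G
CODE_CACHE=512m

heap_max=$(jvm_size_to_mib "$MAX_HEAP_SIZE")
code_cache=$(jvm_size_to_mib "$CODE_CACHE")

echo "initial heap (-Xms):         $(jvm_size_to_mib "$MIN_HEAP_SIZE") MiB"
# Metaspace, thread stacks and GC data structures come on top of this figure.
echo "max heap + code cache:       $(( heap_max + code_cache )) MiB"

# MemTotal is reported in kB. Inside a container it usually shows the host's
# memory, not the container's limit.
if [ -r /proc/meminfo ]; then
  echo "MemTotal (/proc/meminfo):    $(( $(awk '/^MemTotal:/ {print $2}' /proc/meminfo) / 1024 )) MiB"
fi
```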

We are seeing the following error:

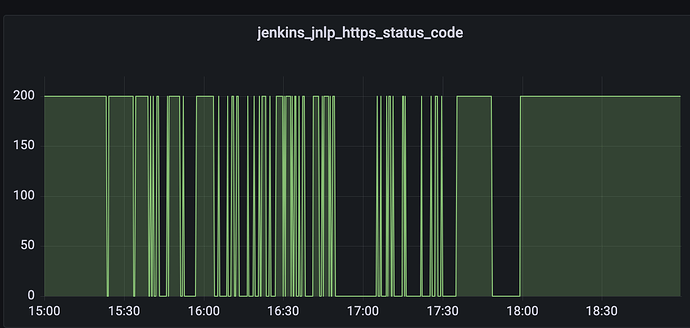

`java.lang.Exception: The server rejected the connection: None of the protocols were accepted`

This happens even though Manage Jenkins → Security → Agent Protocol → Inbound TCP Agent Protocol/4 (TLS encryption) is enabled and unchanged.
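Inbound agents learn the TCP port and the enabled protocols from the controller's `/tcpSlaveAgentListener/` endpoint, which returns them in the `X-Jenkins-JNLP-Port` and `X-Jenkins-Agent-Protocols` response headers. A minimal sketch for capturing what the controller advertises at the moment of a failure, assuming `JENKINS_URL` is set and the endpoint is reachable without credentials in this setup (`parse_agent_headers` and `check_agent_listener` are hypothetical names):

```shell
#!/usr/bin/env bash
# Sketch: print the inbound-agent port and protocols a Jenkins controller advertises.
# Assumption: JENKINS_URL is set (placeholder, e.g. https://jenkins.example.com)
# and /tcpSlaveAgentListener/ is reachable from the machine running this.

# Extract the two headers of interest from a raw HTTP header dump on stdin.
# Header names are matched case-insensitively (HTTP/2 sends them lowercase).
parse_agent_headers() {
  tr -d '\r' | awk -F': *' '
    tolower($1) == "x-jenkins-jnlp-port"       { print "port=" $2 }
    tolower($1) == "x-jenkins-agent-protocols" { print "protocols=" $2 }
  '
}

check_agent_listener() {
  local url=${1%/}/tcpSlaveAgentListener/
  # -D - dumps the response headers to stdout; the body is discarded.
  curl -sS --max-time 10 -D - -o /dev/null "$url" | parse_agent_headers
}

if [ -n "${JENKINS_URL:-}" ]; then
  check_agent_listener "$JENKINS_URL"
fi
```

Running it from an agent host during an incident shows whether the controller was still advertising port 50000 and `JNLP4-connect` at that moment.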

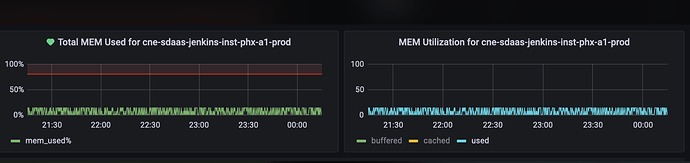

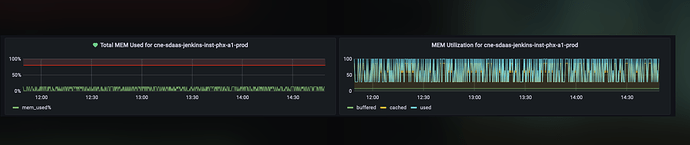

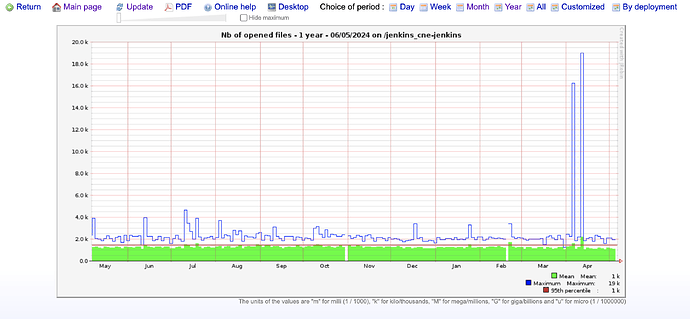

We also see port 50000 toggling up and down on our monitoring dashboard whenever this happens. We suspect that memory pressure in the JVM is responsible: port 50000 goes down, which in turn causes the JNLP connection errors.
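To check whether the port 50000 toggling lines up with GC activity, here is a minimal sketch of a reachability logger, assuming bash (for its `/dev/tcp` redirection) and coreutils `timeout`; `probe_port`, `watch_port` and the host name are placeholders. It prints a line only when the state changes, so the timestamps can be compared directly with the GC log. Each probe opens and immediately closes a TCP connection, so the controller may log these as aborted agent connections.

```shell
#!/usr/bin/env bash
# Sketch: log every change in reachability of the inbound agent port, with UTC
# timestamps, so up/down transitions can be lined up against the GC log.
# Assumptions: bash (for /dev/tcp) and coreutils `timeout` are available.

# Returns 0 if a TCP connection to host:port succeeds within `secs` seconds.
probe_port() {
  local host=$1 port=$2 secs=${3:-3}
  # Inside `bash -c`, $0 is the host and $1 is the port.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Polls every `interval` seconds and prints a line only when the state flips.
watch_port() {
  local host=$1 port=$2 interval=${3:-5} last="" state
  while true; do
    if probe_port "$host" "$port"; then state=up; else state=down; fi
    if [ "$state" != "$last" ]; then
      printf '%s %s:%s %s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$host" "$port" "$state"
      last=$state
    fi
    sleep "$interval"
  done
}

# Example (placeholder host): watch_port jenkins.example.com 50000 5
```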

We would appreciate help with the following:

- The correlation, if any, between JNLP agent connections and JVM memory usage.

- Whether the JVM prioritizes cached memory over actual memory usage.

- Any other factors that could affect the availability of port 50000 for JNLP connections in Jenkins.
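On the cached-memory question, as we understand it: on Linux, the `Cached` figure shown by `free`, `top` and `/proc/meminfo` is mostly kernel page cache (file contents), most of which the kernel can reclaim when processes need memory, and `MemAvailable` already counts that reclaimable part as available. The Java heap is anonymous process memory and is not part of the page cache. A minimal sketch for comparing the two, assuming a Linux host with `/proc`; `meminfo_mib` and `process_rss_mib` are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Sketch: separate OS page cache from memory a process (e.g. the Jenkins JVM)
# actually holds. Assumption: Linux /proc layout.

# Print MemTotal, MemAvailable and Cached from a meminfo file, converted from kB
# to MiB. "SwapCached" is not matched because the pattern is anchored at ^.
meminfo_mib() {
  local file=${1:-/proc/meminfo}
  awk '/^(MemTotal|MemAvailable|Cached):/ {
         printf "%s %d\n", substr($1, 1, length($1) - 1), $2 / 1024
       }' "$file"
}

# Resident set size in MiB from a /proc/<pid>/status file: heap pages actually
# touched plus code cache, metaspace, thread stacks, etc. Page cache is not included.
process_rss_mib() {
  local status_file=${1:-/proc/self/status}
  awk '/^VmRSS:/ { printf "%d\n", $2 / 1024 }' "$status_file"
}
```

For example, `process_rss_mib "/proc/$JENKINS_PID/status"` (with `JENKINS_PID` a placeholder for the Tomcat/Jenkins process ID) gives the JVM's resident size; on JDK 11, `jcmd $JENKINS_PID GC.heap_info` additionally reports used versus committed heap.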

Regards

Hema